I've got a new homelab setup. I affectionately call it JWS, or JSON's Web Services. What started as a single Core i3 NUC running Ubuntu Server in 2017 has become much, much more. Let's take a trip down memory lane and reflect on the beginnings of this journey.

2017: The Initial Lab

Software Development in a small space

For the longest time, I've had this Intel NUC.

- Intel i3-7000 series dual-core chip.

- 32GB RAM.

- 512GB SSD.

- Gigabit Ethernet.

This machine has served me well, and continues to do so. I've run Heroku-like systems (caprover, coolify, dokku) to develop projects I've been working on. I've run database servers, internal git mirrors, hell, even a full-on GNOME desktop to treat it as a remote Linux PC. It's started to show its age, and there's no room for further upgrades. It's also my internal dev machine for my stream projects.

Teaching Workstation (...and gaming)

I started teaching software development bootcamps in 2017, and really got going heading into 2018. At the time, I was running everything via my Intel MacBook Pro, and I wanted to build up a beefier workstation for handling video, software development... and gaming.

I eventually bumped my machine up to a Core i7-7700K processor and an AMD RX 580, and tossed 32GB of DDR3 RAM in there. This machine served me very well... until I moved into live streaming. The "lab" was still more a collection of client machines, with one acting as a true server. I did move to a Wireless AC router w/ 4 Gigabit ports and started hardwiring all the things in my office setup.

2020: Pull Up & Code

At this point, I'd been a remote engineer/manager/instructor since 2017, and when COVID struck in 2020, I got bit with the bug to livestream a hackathon project. And thus, the Twitch channel was born.

However, my setup was rudimentary. Sure, I had a decent setup for teaching, but live teaching over Zoom was less about production quality and more about content delivery and stability. When I started streaming on Twitch in April of 2020, I realized my machine needed a bit of a boost. I also needed to stream via a hardwired setup, because wireless was not reliable. Here, the stream machine was born.

- Intel i7-10700K

- 64GB DDR4 RAM

- (2) 1TB NVMe Drives (in RAID 0 as my Boot)

- (2) 2TB NVMe Drives (Games, Content)

- NVIDIA RTX 2080 Super

- 4TB WD HDD

- Dual 1Gb NIC

- (2) 1080P Elgato Capture PCIe Cards

This machine is not power efficient, but it kicks ass through many workloads and handles streaming with ease. I started out running Windows 10 and using device capture for my MacBook Pro. It's been reliable af.

I've built some fun projects using this machine. I even moved to using WSL 2 and running Ubuntu for development. Teaching remotely? Barely made the fans spin. Data Engineering? Swimmingly well in WSL. I also added some more upgrades, in particular, an RTX 3080 Ti, a new 1200W power supply, and another 2TB NVMe SSD.

Then came my move back to the East Coast.

2022: Fiber, NAS, and Side Quests

fiber? no more xfinity?

In late 2021, I moved to a building that offered up to 2Gb fiber to the home. That speed would make streaming in any quality feasible (and I had no bandwidth caps, unlike Xfinity). The only downside? The main data panel was in my bedroom closet. My office, however, was on the other side of the apartment. Grrr.

Armed with a 50ft CAT6 cable, a new managed switch, and stubbornness, I set out to get the full use of my fiber connection. My new switch, a QNAP 10Gb switch, was lightning fast, silent, and had a really small footprint. I upgraded to a new mesh system, Linksys Atlas Max, so I could take advantage of the 6E capability of my M1 MacBook Pro. I consistently averaged 1.4 Gbps over wireless. Insane!

My lab, however, would mostly sit there. 2022 was a rough year, so I didn't stream consistently. I had no mental capacity to return to teaching. I barely played games anymore so I didn't even bother with wiring my setup either.

2023: Fresh Air, New Energy

getting life back on track

It's been 8 months since I started my new role working in observability. I've done a lot to really give myself more space to breathe and live life the way I'd like to. It's been fun to get back in touch with things I enjoy: tinkering with technology, finishing some stream projects, nature photography, travel, and even getting back to livestreaming.

All this time, I was also still paying cloud providers for all of these projects that sat idle in 2022. Amazon RDS clusters with no active connections, AWS Elastic Beanstalk pipelines and applications that I hadn't updated, Heroku dynos for applications that were not seeing meaningful use. All in all, I was paying out at least $300 a month across so many places.

a new homelab was born

I figured this was a great opportunity to bring everything in-house and nix all these cloud bills.

I started by going down the r/homelab rabbit hole. I'd been watching Techno Tim's #100DaysofHomelab and more, and the good homies at The Alt-F4 Stream put out a lot of devops content. They also have an insane server setup for their stream, which is really more like a full interactive studio, and I endeavor to have a form of it myself. </fanboy>

My first focal point was my network setup. Linksys Atlas Max has poor VLAN support and even fewer options for segmenting a network. I mean, if I'm going to run things in-house, I need to properly lock some things down (including getting IoT devices off my main network).

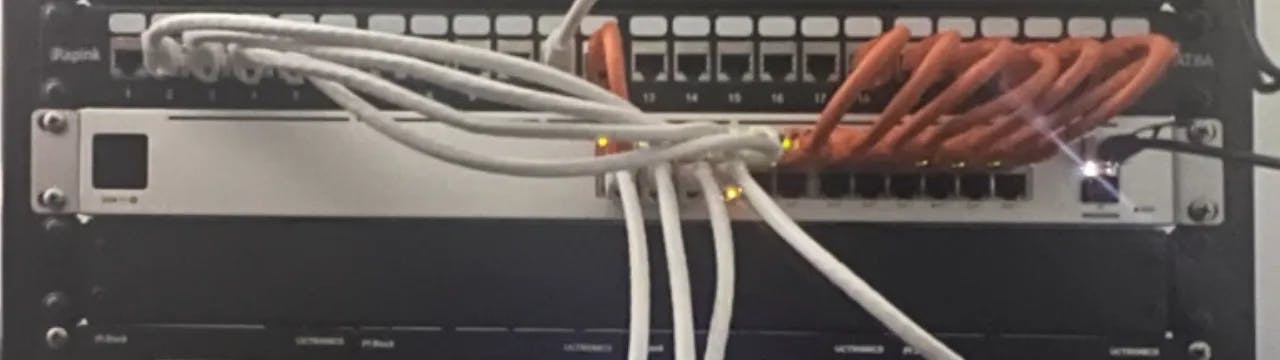

I'll share more about the networking aspect later, but the full rack now consists of the following:

- Unifi Dream Machine Pro

- Unifi USW 24P w/ PoE and PoE+

- Brocade ICX-7150 24P with PoE

- (2) 24 port patch panels with cat6e

Next focal point - my dev server setup. I started pricing some builds and I didn't like the prices at all. Yet, in another serendipitous moment, I came across a sale at Office Depot for Asus SFF (small form factor) PCs.

Here are the specs to the D700SC:

- Core i5-11400 (6 Cores, 12 Threads)

- 512GB NVMe SSD

- 8GB DDR4 RAM (Maximum Supported: 128GB)

- 2x m.2 PCIe 3.0 NVMe

- 1x PCIe® 4.0 x 16

- 2x PCIe® 3.0 x 1

I snapped up 2 of them. I upgraded the RAM to the maximum 128GB and added a 2TB SSD to each machine. They currently run in a 3-node Proxmox cluster (the 3rd node is my trusty NUC, still rolling along). It's been great for learning about all sorts of things, including how to use observability tooling to get metrics and traces from these systems into my account at Honeycomb. I'm going to convert them to 2U rackmount cases so I can be a bit more efficient on rack space (and improve cooling).
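For a rough sense of what that cluster adds up to, here's a back-of-the-envelope sketch in Python. It only uses the core counts and RAM figures listed in this post. The node names are made up for illustration, and the quorum math assumes each node carries one vote and that the cluster needs a simple majority to stay writable, so treat it as an approximation rather than a description of the actual cluster config.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str      # hypothetical hostname, for illustration only
    cores: int     # physical cores, as listed in the post
    ram_gb: int    # installed RAM in GB, as listed in the post


# Two upgraded Asus SFF boxes plus the original NUC.
NODES = [
    Node("asus-1", cores=6, ram_gb=128),
    Node("asus-2", cores=6, ram_gb=128),
    Node("nuc", cores=2, ram_gb=32),
]


def quorum(votes: int) -> int:
    """Smallest strict majority of `votes` (assumes one vote per node)."""
    return votes // 2 + 1


def summarize(nodes: list[Node]) -> dict[str, int]:
    """Total up cluster resources and how many nodes can drop out."""
    needed = quorum(len(nodes))
    return {
        "nodes": len(nodes),
        "cores": sum(n.cores for n in nodes),
        "ram_gb": sum(n.ram_gb for n in nodes),
        "quorum": needed,
        "tolerated_failures": len(nodes) - needed,
    }


if __name__ == "__main__":
    for key, value in summarize(NODES).items():
        print(f"{key}: {value}")
```

Under those assumptions, the third node is what lets the cluster keep a majority when one machine is down for maintenance, which is part of why the NUC is still earning its keep.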

In the next post, I'll dive more into my networking setup. In a follow-up, I'll talk about everything I'm hosting on these clusters, as well as my repurposed Raspberry Pis now running in their own rackmount cluster. You can also catch me streaming updates and my journey to using Ansible to automate all the things.